Kubernetes and Microservice Monitoring System (bilibili)

Thanks, teacher bobo

1. How to build a microservice monitoring system

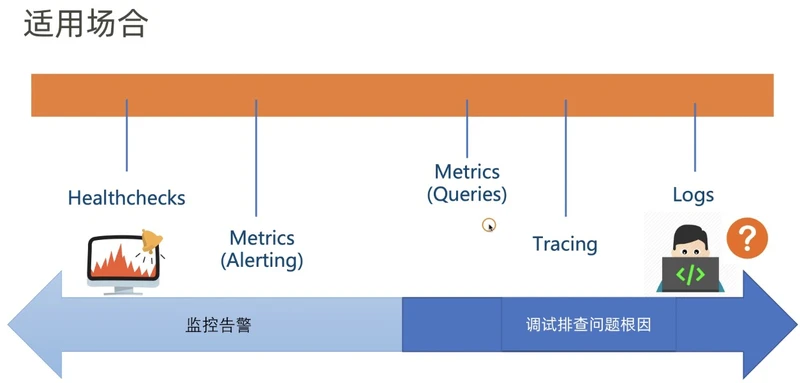

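Metrics: metric monitoring as [Time, Value] pairs, i.e. numeric data points produced at discrete points in time

Logging: log monitoring

Tracing: call chains (the structure of calls across services)

Healthchecks: health checks [periodically check whether an application is still alive]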

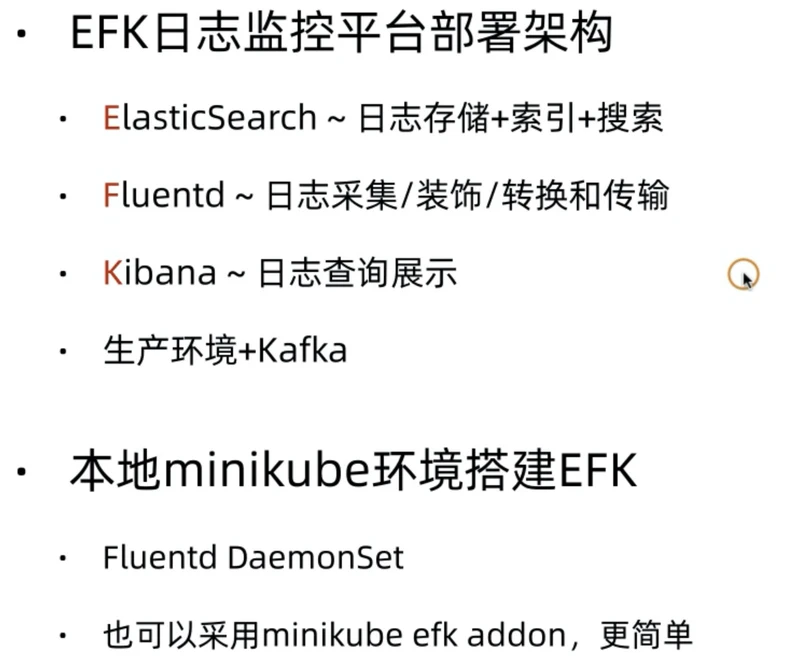

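Setting up the EFK logging platform

Until now, to view a pod's logs we used the kubectl logs command, or went through the pods one by one in the k8s dashboard. This is simple but inefficient, and it does not suit large-scale, enterprise-grade monitoring.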
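For comparison with the centralized approach, the per-pod workflow can be sketched as a small shell helper. The function name `show_pod_logs` and the `KUBECTL` override are illustrative assumptions, not part of the course material; `kubectl logs`, `--namespace` and `--tail` are standard kubectl options.

```shell
#!/bin/sh
# Set KUBECTL=echo to print the command instead of running it (dry run).
KUBECTL="${KUBECTL:-kubectl}"

# show_pod_logs NAMESPACE POD [LINES]
# Prints the last LINES lines (default 100) of a single pod's log.
show_pod_logs() {
  ns="$1"
  pod="$2"
  lines="${3:-100}"
  "$KUBECTL" logs "$pod" --namespace "$ns" --tail="$lines"
}
```

A call such as `show_pod_logs logging <pod-name> 50` has to be repeated for every pod, which is the inefficiency a central log platform removes.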

Enterprises generally use a centralized logging platform such as EFK:

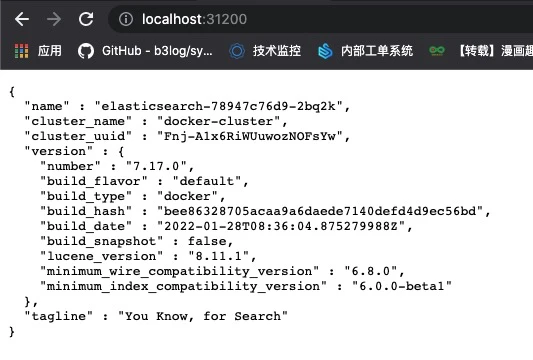

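elastic (Elasticsearch): a distributed search engine, ES for short, used for centralized storage and retrieval of log data

fluentd: the log collection component; it can collect, decorate, transform and forward logs

kibana: a query and visualization UI built on ES; it can also be used for data analysis and big-data visualization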

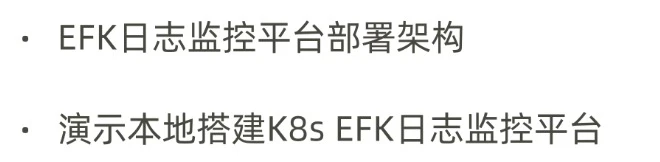

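Note: in k8s, fluentd runs as a DaemonSet

DaemonSet and ReplicaSet are counterpart concepts: a ReplicaSet keeps a given number of pod replicas running somewhere in the cluster, while a DaemonSet runs one pod on every node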

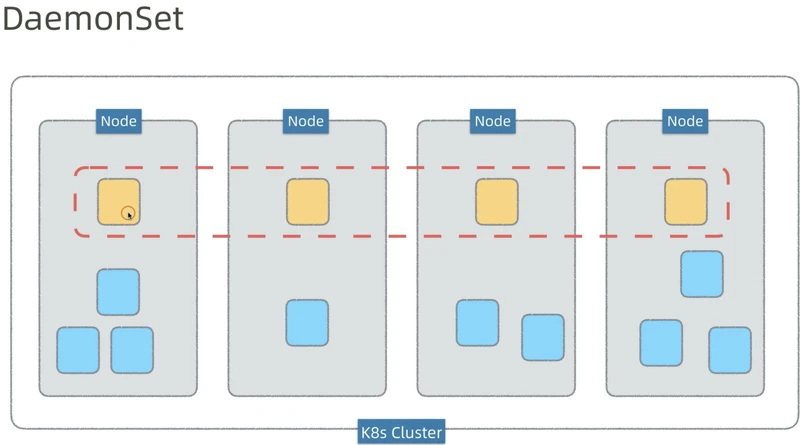

Deploy all of the EFK components locally:

ns.yml

    apiVersion: v1
    kind: Namespace
    metadata:
      name: logging

elastic.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: elasticsearch
      namespace: logging
    spec:
      selector:
        matchLabels:
          component: elasticsearch
      template:
        metadata:
          labels:
            component: elasticsearch
        spec:
          containers:
          - name: elasticsearch
            image: elasticsearch:7.17.0
            env:
            - name: discovery.type
              value: single-node
            ports:
            - containerPort: 9200
              name: http
              protocol: TCP
            resources:
              limits:
                cpu: 500m
                memory: 1Gi
              requests:
                cpu: 500m
                memory: 1Gi
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: elasticsearch
      namespace: logging
      labels:
        service: elasticsearch
    spec:
      type: NodePort
      selector:
        component: elasticsearch
      ports:
      - port: 9200
        targetPort: 9200
        nodePort: 31200

kibana.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kibana
      namespace: logging
    spec:
      selector:
        matchLabels:
          run: kibana
      template:
        metadata:
          labels:
            run: kibana
        spec:
          containers:
          - name: kibana
            image: docker.elastic.co/kibana/kibana:6.5.4
            env:
            - name: ELASTICSEARCH_URL
              value: http://elasticsearch:9200
            - name: XPACK_SECURITY_ENABLED
              value: "true"
            ports:
            - containerPort: 5601
              name: http
              protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kibana
      namespace: logging
      labels:
        service: kibana
    spec:
      type: NodePort
      selector:
        run: kibana
      ports:
      - port: 5601
        targetPort: 5601
        nodePort: 31601

fluentd-rbac.yml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: fluentd
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: fluentd
      namespace: kube-system
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - namespaces
      verbs:
      - get
      - list
      - watch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: fluentd
    roleRef:
      kind: ClusterRole
      name: fluentd
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: ServiceAccount
      name: fluentd
      namespace: kube-system

fluentd-daemonset.yml

(Same content as the RBAC manifest above; the DaemonSet definition itself was not captured in these notes.)
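For reference, a fluentd DaemonSet manifest could look roughly like the sketch below, written out by a small shell function. It is not the manifest used in the video. The image tag, the labels, the `FLUENT_ELASTICSEARCH_*` values and the host paths are assumptions modelled on the upstream fluentd-kubernetes-daemonset examples; only the `fluentd` ServiceAccount in `kube-system` and the `elasticsearch` Service in `logging` come from the manifests above. The function name `write_fluentd_daemonset` is hypothetical.

```shell
#!/bin/sh
# write_fluentd_daemonset FILE
# Writes an assumed, minimal fluentd DaemonSet manifest to FILE.
write_fluentd_daemonset() {
  cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-logging
  template:
    metadata:
      labels:
        k8s-app: fluentd-logging
    spec:
      # ServiceAccount created by the RBAC manifest
      serviceAccountName: fluentd
      containers:
      - name: fluentd
        # Assumed image tag; pick one that matches your Elasticsearch version
        image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
        env:
        # Service "elasticsearch" in namespace "logging"
        - name: FLUENT_ELASTICSEARCH_HOST
          value: elasticsearch.logging
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      # Node directories where container logs are stored
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
EOF
}
```

Calling `write_fluentd_daemonset fluentd-daemonset.yml` produces a file that can then be applied with `kubectl apply -f`. Because it is a DaemonSet, one fluentd pod is scheduled per node and reads that node's log directories through the hostPath mounts.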

(1). Deploy the namespace first, because the other resources depend on it

(2). Deploy ES

(3). Deploy kibana
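The three steps above can be sketched as one shell function. The file names are the ones listed in these notes; the function name `deploy_efk` and the `KUBECTL` dry-run override are illustrative assumptions.

```shell
#!/bin/sh
# Set KUBECTL=echo to print the commands instead of running them (dry run).
KUBECTL="${KUBECTL:-kubectl}"

# deploy_efk
# Applies the manifests in dependency order and stops at the first failure.
deploy_efk() {
  # ns.yml goes first: elastic.yml and kibana.yml both use the logging namespace
  for manifest in ns.yml elastic.yml kibana.yml; do
    "$KUBECTL" apply -f "$manifest" || return 1
  done
}
```

Run `deploy_efk` from the directory that holds the manifests. The fluentd manifests live in kube-system, so they can be applied the same way before or after this step.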

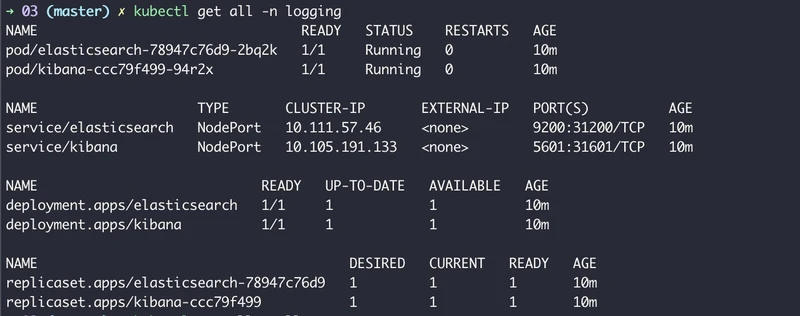

Verify: [the images have to be pulled first, so it may take a while before the pods are ready]

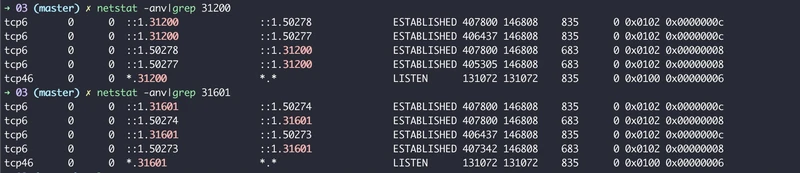

Verify the service ports:

Verify elastic:

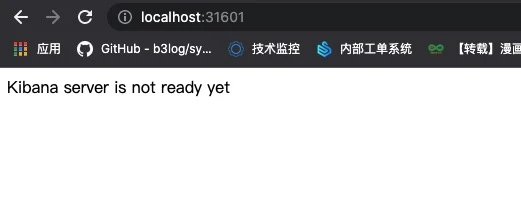
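The checks shown in the screenshots can be approximated on the command line as follows. This is a sketch under assumptions: the function name `check_efk`, the `KUBECTL`/`CURL` overrides and the use of the `_cluster/health` endpoint are not from the course; the namespace `logging` and NodePort `31200` come from the manifests above.

```shell
#!/bin/sh
# Set KUBECTL=echo and CURL=echo to print the commands instead of running them.
KUBECTL="${KUBECTL:-kubectl}"
CURL="${CURL:-curl}"

# check_efk NODE_IP
# Lists the pods and services in the logging namespace, then asks
# Elasticsearch for its cluster health through the NodePort.
check_efk() {
  node_ip="$1"
  "$KUBECTL" get pods --namespace logging
  "$KUBECTL" get svc --namespace logging
  "$CURL" "http://${node_ip}:31200/_cluster/health?pretty"
}
```

With minikube, the node address is usually available as `check_efk "$(minikube ip)"`. Depending on the minikube driver the NodePort may not be reachable directly from the host; in that case `minikube service elasticsearch -n logging --url` should print a usable URL.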

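Verify kibana: [to be investigated later; this problem is left open for now. One thing to check: the kibana image above is 6.5.4 while Elasticsearch is 7.17.0, and Kibana generally has to match the Elasticsearch version it connects to.]

4. Querying logs with Kibana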

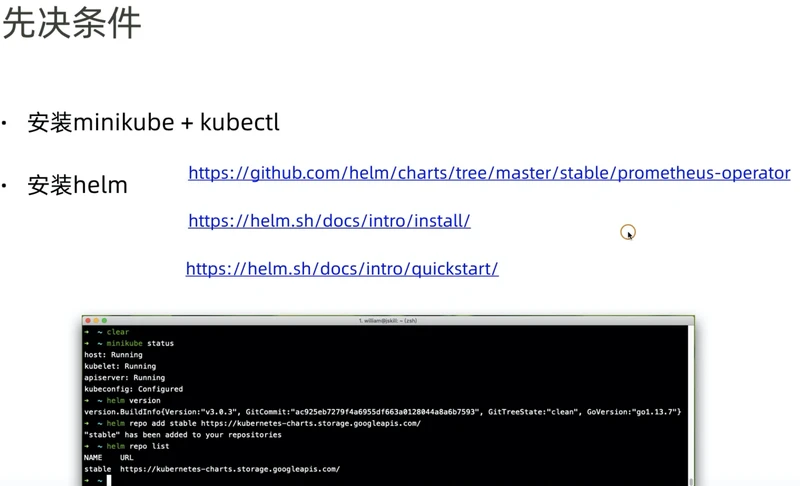

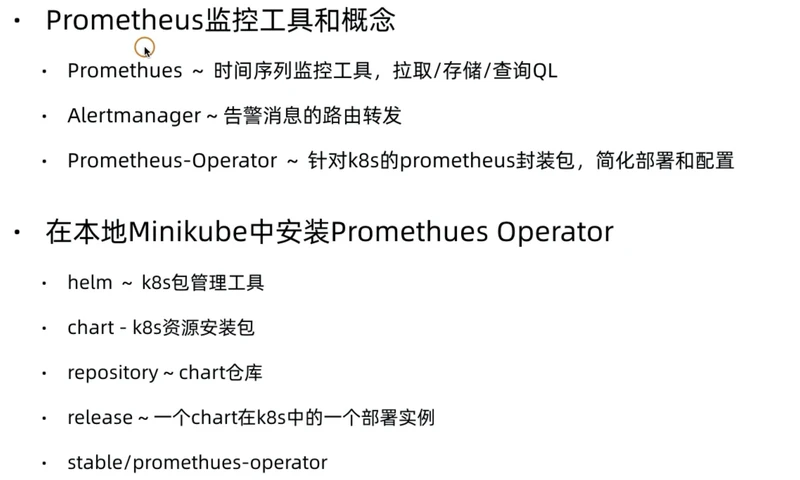

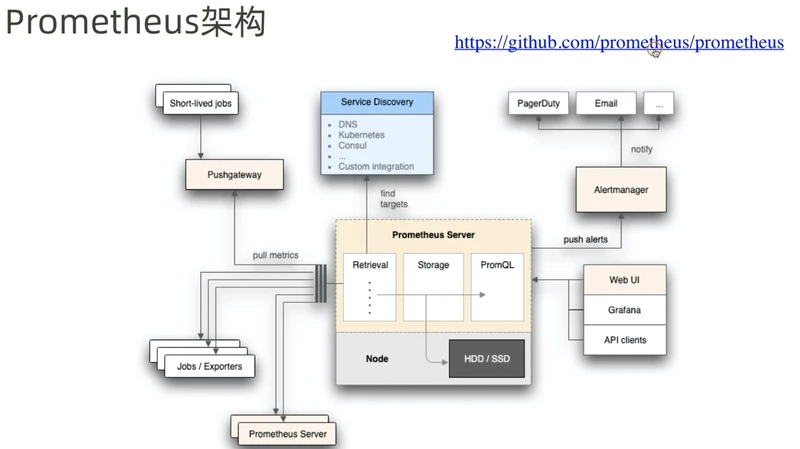

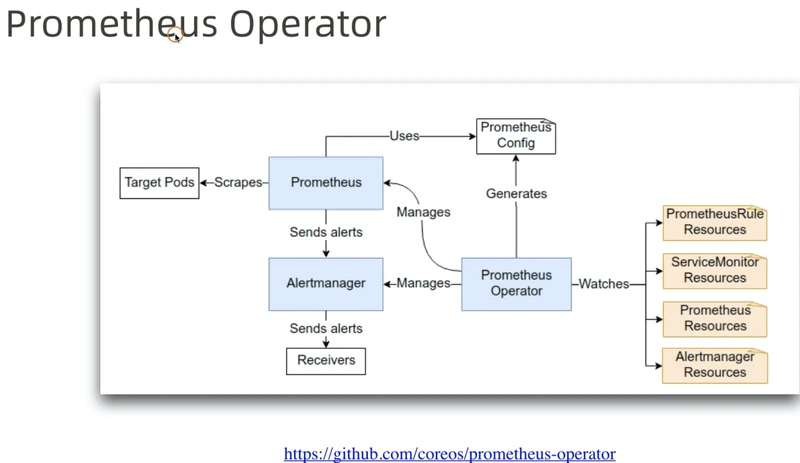

5. Setting up the Prometheus metrics monitoring platform

Helm | Quickstart Guide

Next, install the prometheus operator with helm:

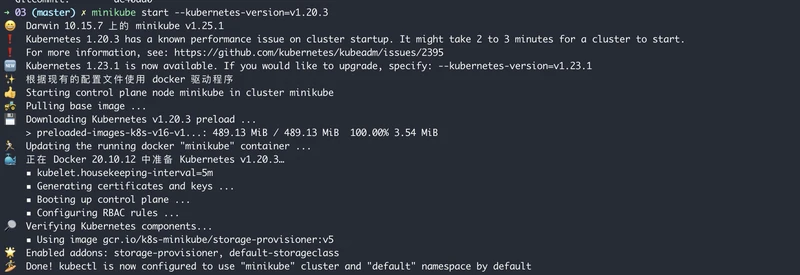

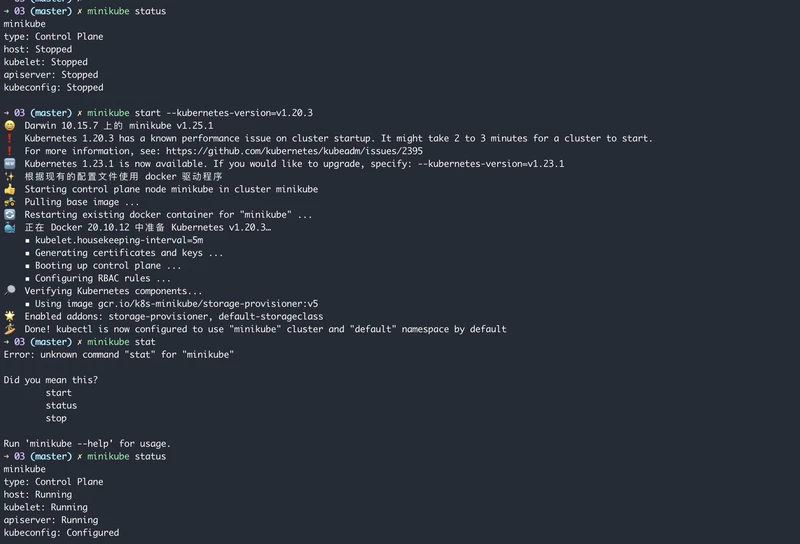

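[Note: the cluster was previously started with --kubernetes-version=v1.23.1]

With that version, the helm install fails with the following error:

    mac@1987demac:~$ helm install prometheus-operator stable/prometheus-operator -n monitoring
    WARNING: This chart is deprecated
    Error: INSTALLATION FAILED: failed to install CRD crds/crd-alertmanager.yaml: unable to recognize "": no matches for kind "CustomResourceDefinition" in version "apiextensions.k8s.io/v1beta1"

Fix: see "Sticking point 5: helm install of prometheus-operator fails" – 阿丁运维

Lower the Kubernetes version: the chart still ships its CRDs as apiextensions.k8s.io/v1beta1, which is no longer served from v1.22 onwards.

So first make sure minikube is up and running on the older version: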
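Restarting minikube on an older Kubernetes release might look like the sketch below. The notes do not record which version was used, so `v1.21.14` is only an assumed example of a release below v1.22; the function name `start_old_cluster` and the `MINIKUBE` override are also illustrative.

```shell
#!/bin/sh
# Set MINIKUBE=echo to print the command instead of running it (dry run).
MINIKUBE="${MINIKUBE:-minikube}"

# start_old_cluster [VERSION]
# Starts minikube with a Kubernetes version that still serves
# apiextensions.k8s.io/v1beta1 (anything below v1.22).
start_old_cluster() {
  version="${1:-v1.21.14}"   # assumed default, not taken from the notes
  "$MINIKUBE" start --kubernetes-version="$version"
}
```

If a cluster on a newer version already exists, it may have to be removed with `minikube delete` before the older version can be started.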

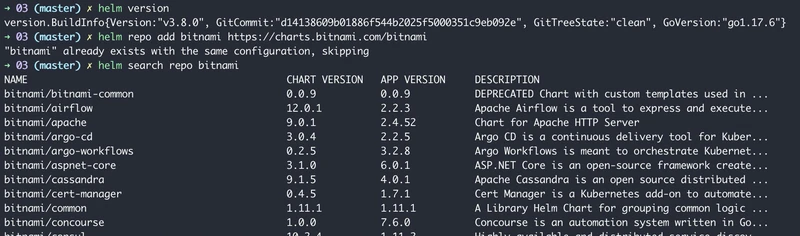

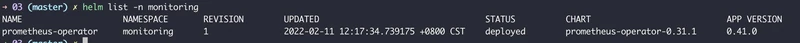

Install and verify:

    kubectl create ns monitoring

    helm install prometheus-operator bitnami/prometheus-operator -n monitoring

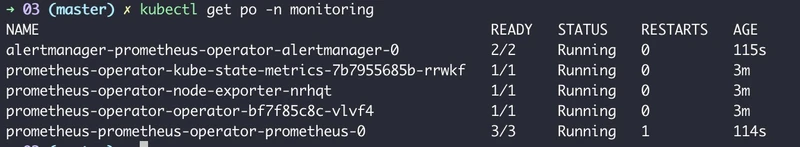

    ➜ 03 (master) ✗ kubectl create ns monitoring
    namespace/monitoring created
    ➜ 03 (master) ✗ helm install prometheus-operator bitnami/prometheus-operator -n monitorning
    WARNING: This chart is deprecated
    W0211 12:16:56.578352 11235 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:16:56.603300 11235 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:16:56.633336 11235 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:16:56.710907 11235 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:16:56.723861 11235 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:16:56.740750 11235 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:16:56.814739 11235 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    Error: INSTALLATION FAILED: create: failed to create: namespaces "monitorning" not found
    ➜ 03 (master) ✗ kubectl create ns monitoring
    Error from server (AlreadyExists): namespaces "monitoring" already exists
    ➜ 03 (master) ✗ helm install prometheus-operator bitnami/prometheus-operator -n monitoring
    WARNING: This chart is deprecated
    W0211 12:17:32.474074 11366 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:17:32.486881 11366 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:17:32.499689 11366 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:17:32.574838 11366 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:17:32.585938 11366 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:17:32.603611 11366 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    W0211 12:17:32.664427 11366 warnings.go:70] apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    manifest_sorter.go:192: info: skipping unknown hook: "crd-install"
    NAME: prometheus-operator
    LAST DEPLOYED: Fri Feb 11 12:17:34 2022
    NAMESPACE: monitoring
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    This Helm Chart was deprecated in favor of `bitnami/kube-prometheus`, in this issue (https://github.com/bitnami/charts/issues/3490) you can find more information about the reasons for renaming the chart
    ** Please be patient while the chart is being deployed **
    Watch the Prometheus Operator Deployment status using the command:
        kubectl get deploy -w --namespace monitoring -l app.kubernetes.io/name=prometheus-operator-operator,app.kubernetes.io/instance=prometheus-operator
    Watch the Prometheus StatefulSet status using the command:
        kubectl get sts -w --namespace monitoring -l app.kubernetes.io/name=prometheus-operator-prometheus,app.kubernetes.io/instance=prometheus-operator
    Prometheus can be accessed via port "9090" on the following DNS name from within your cluster:
        prometheus-operator-prometheus.monitoring.svc.cluster.local
    To access Prometheus from outside the cluster execute the following commands:
        echo "Prometheus URL: http://127.0.0.1:9090/"
        kubectl port-forward --namespace monitoring svc/prometheus-operator-prometheus 9090:9090
    Watch the Alertmanager StatefulSet status using the command:
        kubectl get sts -w --namespace monitoring -l app.kubernetes.io/name=prometheus-operator-alertmanager,app.kubernetes.io/instance=prometheus-operator
    Alertmanager can be accessed via port "9093" on the following DNS name from within your cluster:
        prometheus-operator-alertmanager.monitoring.svc.cluster.local
    To access Alertmanager from outside the cluster execute the following commands:
        echo "Alertmanager URL: http://127.0.0.1:9093/"
        kubectl port-forward --namespace monitoring svc/prometheus-operator-alertmanager 9093:9093
    ➜ 03 (master) ✗ kubectl get po -n monitoring
    NAME                                                      READY   STATUS              RESTARTS   AGE
    alertmanager-prometheus-operator-alertmanager-0           0/2     ContainerCreating   0          8s
    prometheus-operator-kube-state-metrics-7b7955685b-rrwkf   1/1     Running             0          73s
    prometheus-operator-node-exporter-nrhqt                   0/1     Running             0          73s
    prometheus-operator-operator-bf7f85c8c-vlvf4              0/1     Running             0          73s
    prometheus-prometheus-operator-prometheus-0               0/3     ContainerCreating   0          7s
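The first attempt in the log failed only because the namespace was misspelled as "monitorning". One way to avoid that class of mistake is to pass the namespace once and let helm create it, as in the sketch below. The function name `install_prometheus_operator` and the `HELM` override are illustrative assumptions; `--create-namespace` is a Helm 3 flag, and the Bitnami repository URL is the commonly documented one, which may have changed since these notes were written.

```shell
#!/bin/sh
# Set HELM=echo to print the commands instead of running them (dry run).
HELM="${HELM:-helm}"

# install_prometheus_operator [NAMESPACE]
# Registers the bitnami repo, then installs the chart into NAMESPACE,
# creating the namespace if it does not exist yet.
install_prometheus_operator() {
  ns="${1:-monitoring}"
  "$HELM" repo add bitnami https://charts.bitnami.com/bitnami || return 1
  "$HELM" install prometheus-operator bitnami/prometheus-operator \
    --namespace "$ns" --create-namespace
}
```

As the NOTES section of the log says, this chart was deprecated in favor of `bitnami/kube-prometheus`, so the chart name here may no longer resolve in a current repository.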

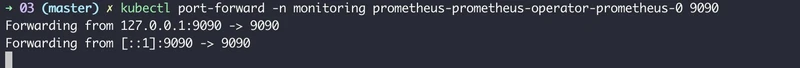

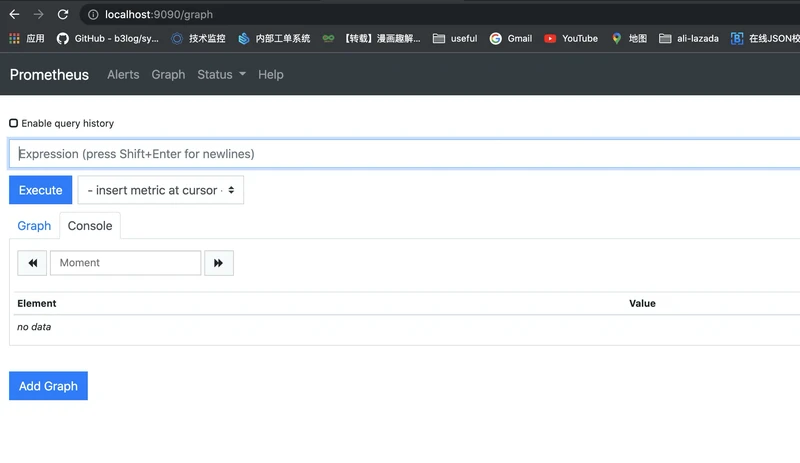

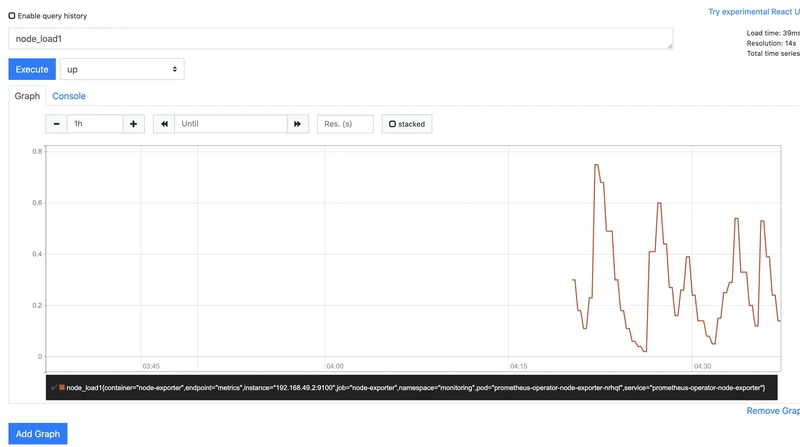

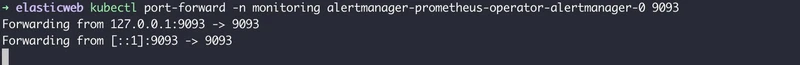

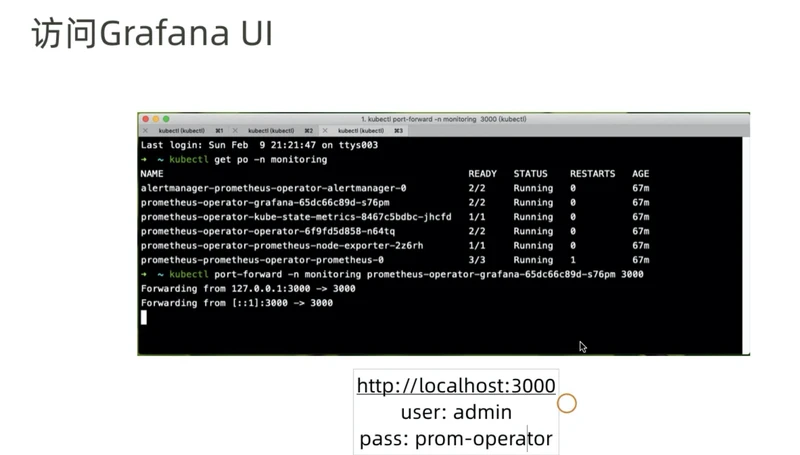

How do we reach the built-in web UI from a browser? [Use port forwarding]
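The port-forward commands printed in the helm NOTES can be wrapped as below. The service names and ports (9090, 9093) are taken from that output; the function names and the `KUBECTL` override are illustrative assumptions.

```shell
#!/bin/sh
# Set KUBECTL=echo to print the commands instead of running them (dry run).
KUBECTL="${KUBECTL:-kubectl}"

# forward_svc SERVICE PORT
# Exposes a service from the monitoring namespace on 127.0.0.1:PORT.
forward_svc() {
  "$KUBECTL" port-forward --namespace monitoring "svc/$1" "$2:$2"
}

# Prometheus UI on http://127.0.0.1:9090/
forward_prometheus() {
  forward_svc prometheus-operator-prometheus 9090
}

# Alertmanager UI on http://127.0.0.1:9093/
forward_alertmanager() {
  forward_svc prometheus-operator-alertmanager 9093
}
```

Each function blocks while the tunnel is open, so run `forward_prometheus` and `forward_alertmanager` in separate terminals and stop them with Ctrl-C.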

prometheus-operator has already configured the relevant monitoring targets for us

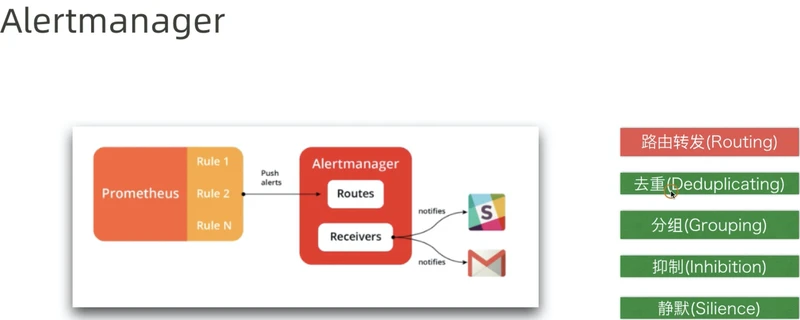

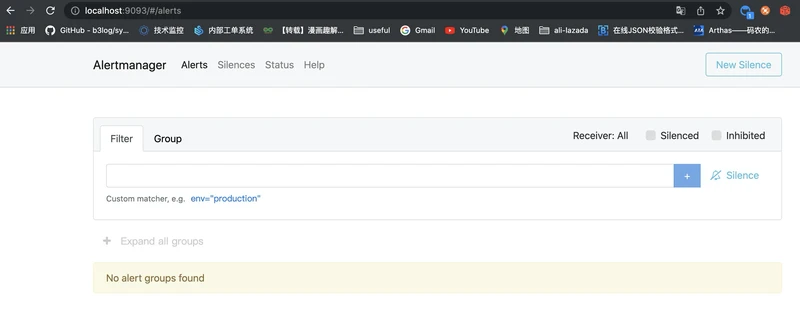

Alertmanager

If Grafana is needed

4. Customizing Alertmanager email notifications